Beyond Boundaries is a 2-player cooperative puzzle game inspired by Portal, developed as part of the “Interaction in Mixed Reality – Basic Concepts” lecture. In the game, two players must collaborate to navigate complex levels using portals, gesture-based interactions, and weight-sensitive objects. The game focuses on teamwork, spatial awareness, and creative problem-solving in a mixed reality environment.

Gameplay

Players control two characters with distinct interaction methods. Corey uses a traditional controller to move, place portals, and manipulate objects, while Lumen relies entirely on hand gestures. Lumen places portals by drawing circles in the direction they should appear, and each player can traverse both their own and the other player’s portals.

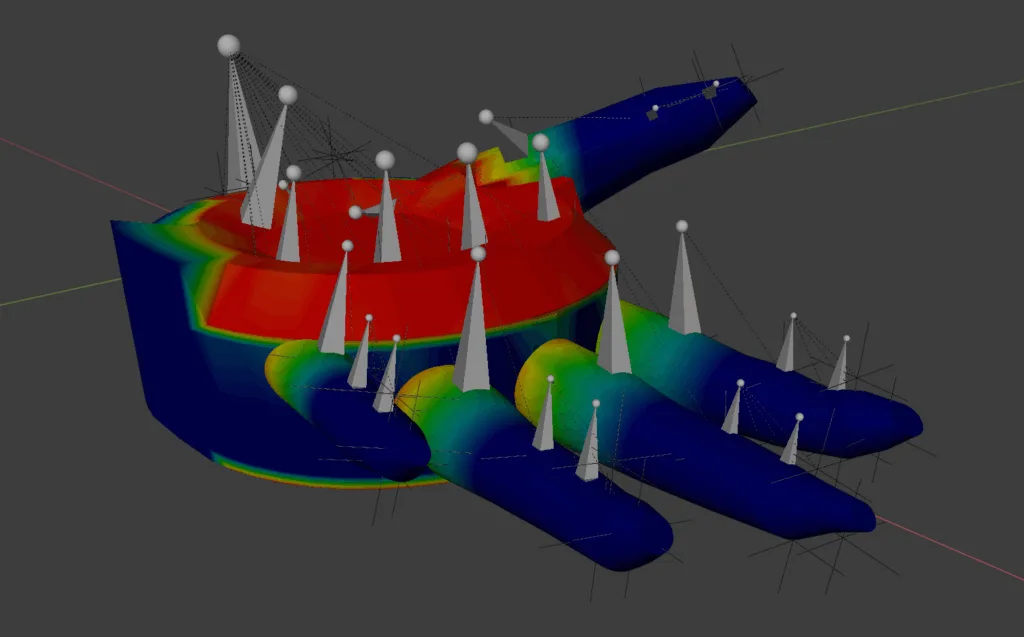

Central to the puzzles is the Weighted Interaction Cube, which players can grab and move. Its weight can be adjusted by scaling it with both hands, and it can travel through portals. The Weighted Cube Detector acts as a scale and activates only when the cube’s weight falls within its displayed range. Its color gives visual feedback: blue for standby, red for an incorrect weight, and green for a correct weight. Lumen can also activate Spawn Tubes with a thumbs-up gesture to create new weighted cubes.
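The detector logic described above can be sketched in a few lines. This is a minimal, engine-agnostic illustration in Python rather than the game's actual Unity code. The names `DetectorState`, `cube_weight`, and `detector_state` are hypothetical, and the rule that weight grows with the cube of the scale factor is an assumption made for the example; the game may map scale to weight differently.

```python
from enum import Enum
from typing import Optional


class DetectorState(Enum):
    STANDBY = "blue"     # no cube on the detector
    INCORRECT = "red"    # cube present, weight outside the displayed range
    CORRECT = "green"    # cube present, weight inside the displayed range


def cube_weight(base_weight: float, scale: float) -> float:
    """Assumed rule: weight follows volume, so it grows with scale cubed."""
    return base_weight * scale ** 3


def detector_state(weight: Optional[float],
                   min_weight: float,
                   max_weight: float) -> DetectorState:
    """Return the feedback state for the cube currently on the detector.

    `weight` is None when no cube is placed on the detector.
    """
    if weight is None:
        return DetectorState.STANDBY
    if min_weight <= weight <= max_weight:
        return DetectorState.CORRECT
    return DetectorState.INCORRECT
```

With these assumptions, a cube of base weight 1 scaled to twice its size weighs 8 and would turn a detector with the range 5 to 10 green.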

The game culminates in a cooperative twist on Simon Says: two elevators present a sequence that only one player can see, and the other player must replicate it. This design emphasizes communication and coordination.
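The core check behind such a sequence puzzle might look like the sketch below. It is an illustration only: the symbol set `SYMBOLS` and the functions `make_sequence` and `check_attempt` are hypothetical, and the source does not state what the sequence consists of or how long it is.

```python
import random
from typing import List, Sequence

# Hypothetical symbol set; the actual game may use other cues.
SYMBOLS = ("red", "green", "blue", "yellow")


def make_sequence(length: int, rng: random.Random) -> List[str]:
    """Generate the sequence shown to the player who can see it."""
    return [rng.choice(SYMBOLS) for _ in range(length)]


def check_attempt(shown: Sequence[str], entered: Sequence[str]) -> str:
    """Compare the other player's input so far with the shown sequence.

    Returns "wrong" as soon as the input deviates (or runs too long),
    "correct" once the full sequence is matched, else "in_progress".
    """
    if list(entered) != list(shown[:len(entered)]):
        return "wrong"
    if len(entered) == len(shown):
        return "correct"
    return "in_progress"
```

Checking the input after every step, rather than only at the end, lets the game give immediate feedback when the replicating player makes a mistake.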

Challenges

Creating Beyond Boundaries involved several technical hurdles. Teleportation through portals required adding an invisible Rigidbody, because Unity’s CharacterController is not driven by the physics engine and does not react to forces on its own. A small force was applied at the portal exit to prevent players from getting stuck. Recreating the iconic “look-through” portal effect in VR proved tricky, as the stereoscopic rendering of the Meta Quest 3 distorted the portal textures. Achieving the effect properly would have required a separate camera for each eye and adjusted rendering calculations.
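The idea of teleporting with an exit push can be illustrated with a simplified sketch. It is a top-down 2D model in Python, not the Unity implementation: each portal is reduced to a position and the angle of its outward-facing normal, the function `teleport` and the parameters `push` and `offset` are hypothetical, and the push is modeled as an added velocity rather than a physics force.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def rotate(v: Vec2, angle: float) -> Vec2:
    """Rotate a 2D vector counter-clockwise by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return (c * v[0] - s * v[1], s * v[0] + c * v[1])


def teleport(velocity: Vec2,
             entry_angle: float,
             exit_pos: Vec2,
             exit_angle: float,
             push: float = 0.5,
             offset: float = 0.1) -> Tuple[Vec2, Vec2]:
    """Map a body entering one portal to the exit of the other.

    Angles describe each portal's outward normal. A body moving into
    the entry portal (against its normal) leaves along the exit normal.
    """
    # Turn the velocity so "into the entry" becomes "out of the exit".
    turn = exit_angle - entry_angle + math.pi
    vx, vy = rotate(velocity, turn)

    nx, ny = math.cos(exit_angle), math.sin(exit_angle)
    # Place the body slightly in front of the exit so it does not
    # immediately re-trigger the portal.
    new_pos = (exit_pos[0] + nx * offset, exit_pos[1] + ny * offset)
    # Add a small outward push so a slow body does not get stuck.
    new_vel = (vx + nx * push, vy + ny * push)
    return new_pos, new_vel
```

For example, a body moving at `(-2, 0)` into a portal facing along the positive x-axis and leaving through a portal at `(10, 0)` that faces along the positive y-axis ends up just in front of the exit, moving upward slightly faster than it entered.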

Additionally, the custom hand model for Lumen had to follow the player’s hand gestures accurately, and it initially interfered with gesture detection. Overcoming this challenge provided valuable experience in integrating physical gestures with MR interactions.